Customer Segmentation Using K-Means Clustering

In this project walkthrough, we’ll explore how to segment credit card customers using unsupervised machine learning. By analyzing customer behavior and demographic data, we’ll identify distinct customer groups to help a credit card company develop targeted marketing strategies and improve their bottom line.

Customer segmentation is a powerful technique used by businesses to understand their customers better. By grouping similar customers together, companies can tailor their marketing efforts, product offerings, and customer service strategies to meet the specific needs of each segment, ultimately leading to increased customer satisfaction and revenue.

In this tutorial, we’ll take you through the complete machine learning workflow, from exploratory data analysis to model building and interpretation of results.

What You’ll Learn

By the end of this tutorial, you’ll know how to:

- Perform exploratory data analysis on customer data

- Transform categorical variables for machine learning algorithms

- Use K-means clustering to segment customers

- Apply the elbow method to determine the optimal number of clusters

- Interpret and visualize clustering results for actionable insights

Before You Start: Pre-Instruction

To make the most of this project walkthrough, follow these preparatory steps:

- Review the Project

Access the project and familiarize yourself with the goals and structure: Customer Segmentation Project.

- Prepare Your Environment

- If you’re using the Dataquest platform, everything is already set up for you.

- If you’re working locally, ensure you have Python and Jupyter Notebook installed, along with the required libraries: pandas, numpy, matplotlib, seaborn, and scikit-learn (imported as sklearn).

- To work on this project, you’ll need the customer_segmentation.csv dataset, which contains information about the company’s clients. Our task is to segment them into groups so the company can apply a different business strategy to each type of customer.

- Get Comfortable with Jupyter

- New to Markdown? We recommend learning the basics to format headers and add context to your Jupyter notebook: Markdown Guide.

- For file sharing and project uploads, create a GitHub account.

Setting Up Your Environment

Before we dive into creating our clustering model, let’s review how to use Jupyter Notebook and set up the required libraries for this project.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.preprocessing import StandardScaler

from sklearn.cluster import KMeans

Learning Insight: When working with scikit-learn, it’s common practice to import specific functions or classes rather than the entire library. This approach keeps your code clean and focused, while also making it clear which tools you’re using for each step of your analysis.

Now let’s load our customer data and take a first look at what we’re working with:

df = pd.read_csv('customer_segmentation.csv')

df.head()

| customer_id | age | gender | dependent_count | education_level | marital_status | estimated_income | months_on_book | total_relationship_count | months_inactive_12_mon | credit_limit | total_trans_amount | total_trans_count | avg_utilization_ratio |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 768805383 | 45 | M | 3 | High School | Married | 69000 | 39 | 5 | 1 | 12691.0 | 1144 | 42 | 0.061 |
| 818770008 | 49 | F | 5 | Graduate | Single | 24000 | 44 | 6 | 1 | 8256.0 | 1291 | 33 | 0.105 |
| 713982108 | 51 | M | 3 | Graduate | Married | 93000 | 36 | 4 | 1 | 3418.0 | 1887 | 20 | 0.000 |
| 769911858 | 40 | F | 4 | High School | Unknown | 37000 | 34 | 3 | 4 | 3313.0 | 1171 | 20 | 0.760 |
| 709106358 | 40 | M | 3 | Uneducated | Married | 65000 | 21 | 5 | 1 | 4716.0 | 816 | 28 | 0.000 |

Understanding the Dataset

Let’s better understand our dataset by examining its structure and checking for missing values:

df.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 10127 entries, 0 to 10126

Data columns (total 14 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 customer_id 10127 non-null int64

1 age 10127 non-null int64

2 gender 10127 non-null object

3 dependent_count 10127 non-null int64

4 education_level 10127 non-null object

5 marital_status 10127 non-null object

6 estimated_income 10127 non-null int64

7 months_on_book 10127 non-null int64

8 total_relationship_count 10127 non-null int64

9 months_inactive_12_mon 10127 non-null int64

10 credit_limit 10127 non-null float64

11 total_trans_amount 10127 non-null int64

12 total_trans_count 10127 non-null int64

13 avg_utilization_ratio 10127 non-null float64

dtypes: float64(2), int64(9), object(3)

memory usage: 1.1+ MB

Our dataset contains 10,127 customer records with 14 variables. Fortunately, there are no missing values, which simplifies our data preparation process. Let’s understand what each of these variables represents:

- customer_id: Unique identifier for each customer

- age: Customer’s age in years

- gender: Customer’s gender (M/F)

- dependent_count: Number of dependents (e.g., children)

- education_level: Customer’s education level

- marital_status: Customer’s marital status

- estimated_income: Estimated annual income in dollars

- months_on_book: How long the customer has been with the credit card company

- total_relationship_count: Number of times the customer has contacted the company

- months_inactive_12_mon: Number of months the customer didn’t use their card in the past 12 months

- credit_limit: Credit card limit in dollars

- total_trans_amount: Total amount spent on the credit card

- total_trans_count: Total number of transactions

- avg_utilization_ratio: Average card utilization ratio (how much of their available credit they use)
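The "no missing values" reading of `df.info()` can also be checked directly. The sketch below uses a small made-up frame (the `toy` name and its values are assumptions for illustration, not part of the project data) to show how `isna().sum()` counts gaps per column:

```python
import numpy as np
import pandas as pd

# Hypothetical toy frame with two deliberate gaps
toy = pd.DataFrame({
    "age": [45, 49, np.nan, 40],
    "gender": ["M", "F", "M", None],
    "credit_limit": [12691.0, 8256.0, 3418.0, 3313.0],
})

# isna() marks missing cells; sum() counts them column by column
missing_per_column = toy.isna().sum()
print(missing_per_column)
```

On the real dataset, the same call on `df` should report zero for every column, matching the non-null counts shown above.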

Before we dive deeper into the analysis, let’s check the distribution of our categorical variables:

print(df['marital_status'].value_counts(), end="\n\n")

print(df['gender'].value_counts(), end="\n\n")

df['education_level'].value_counts()

marital_status

Married 4687

Single 3943

Unknown 749

Divorced 748

Name: count, dtype: int64

gender

F 5358

M 4769

Name: count, dtype: int64

education_level

Graduate 3685

High School 2351

Uneducated 1755

College 1192

Post-Graduate 616

Doctorate 528

Name: count, dtype: int64

Just under half of the customers are married (about 46%), followed by single customers (about 39%), with much smaller groups that are divorced or have an unknown marital status (about 7% each).

The gender distribution is fairly balanced, with a slight majority of female customers (about 53%) compared to male customers (about 47%).

The education level variable shows that most customers have a graduate or high school education, followed by a substantial portion who are uneducated. Smaller segments have attended college, achieved post-graduate degrees, or earned a doctorate. This suggests a wide range of educational backgrounds, with a majority concentrated in mid-level educational attainment.
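The percentages quoted above follow from the printed counts. As a quick check, this sketch rebuilds two of the count tables by hand (the numbers are copied from the output above; the variable names are just illustrative) and converts them to shares:

```python
import pandas as pd

# Counts copied from the value_counts() output above
gender_counts = pd.Series({"F": 5358, "M": 4769}, name="gender")
marital_counts = pd.Series(
    {"Married": 4687, "Single": 3943, "Unknown": 749, "Divorced": 748},
    name="marital_status",
)

# Dividing by the total turns raw counts into shares of all customers
gender_share = (gender_counts / gender_counts.sum()).round(3)
marital_share = (marital_counts / marital_counts.sum()).round(3)

print(gender_share)
print(marital_share)
```

When working with the full DataFrame, `df['gender'].value_counts(normalize=True)` returns the same shares in one step.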

Exploratory Data Analysis (EDA)

Now let’s explore the numerical variables in our dataset to understand their distributions:

df.describe()

This gives us a statistical summary of our numerical variables, including counts, means, standard deviations, and quantiles:

| | customer_id | age | dependent_count | estimated_income | months_on_book | total_relationship_count | months_inactive_12_mon | credit_limit | total_trans_amount | total_trans_count | avg_utilization_ratio |
|---|---|---|---|---|---|---|---|---|---|---|---|
| count | 1.012700e+04 | 10127.000000 | 10127.000000 | 10127.000000 | 10127.000000 | 10127.000000 | 10127.000000 | 10127.000000 | 10127.000000 | 10127.000000 | 10127.000000 |
| mean | 7.391776e+08 | 46.325960 | 2.346203 | 62078.206774 | 35.928409 | 3.812580 | 2.341167 | 8631.953698 | 4404.086304 | 64.858695 | 0.274894 |
| std | 3.690378e+07 | 8.016814 | 1.298908 | 39372.861291 | 7.986416 | 1.554408 | 1.010622 | 9088.776650 | 3397.129254 | 23.472570 | 0.275691 |
| min | 7.080821e+08 | 26.000000 | 0.000000 | 20000.000000 | 13.000000 | 1.000000 | 0.000000 | 1438.300000 | 510.000000 | 10.000000 | 0.000000 |
| 25% | 7.130368e+08 | 41.000000 | 1.000000 | 32000.000000 | 31.000000 | 3.000000 | 2.000000 | 2555.000000 | 2155.500000 | 45.000000 | 0.023000 |
| 50% | 7.179264e+08 | 46.000000 | 2.000000 | 50000.000000 | 36.000000 | 4.000000 | 2.000000 | 4549.000000 | 3899.000000 | 67.000000 | 0.176000 |
| 75% | 7.731435e+08 | 52.000000 | 3.000000 | 80000.000000 | 40.000000 | 5.000000 | 3.000000 | 11067.500000 | 4741.000000 | 81.000000 | 0.503000 |
| max | 8.283431e+08 | 73.000000 | 5.000000 | 200000.000000 | 56.000000 | 6.000000 | 6.000000 | 34516.000000 | 18484.000000 | 139.000000 | 0.999000 |

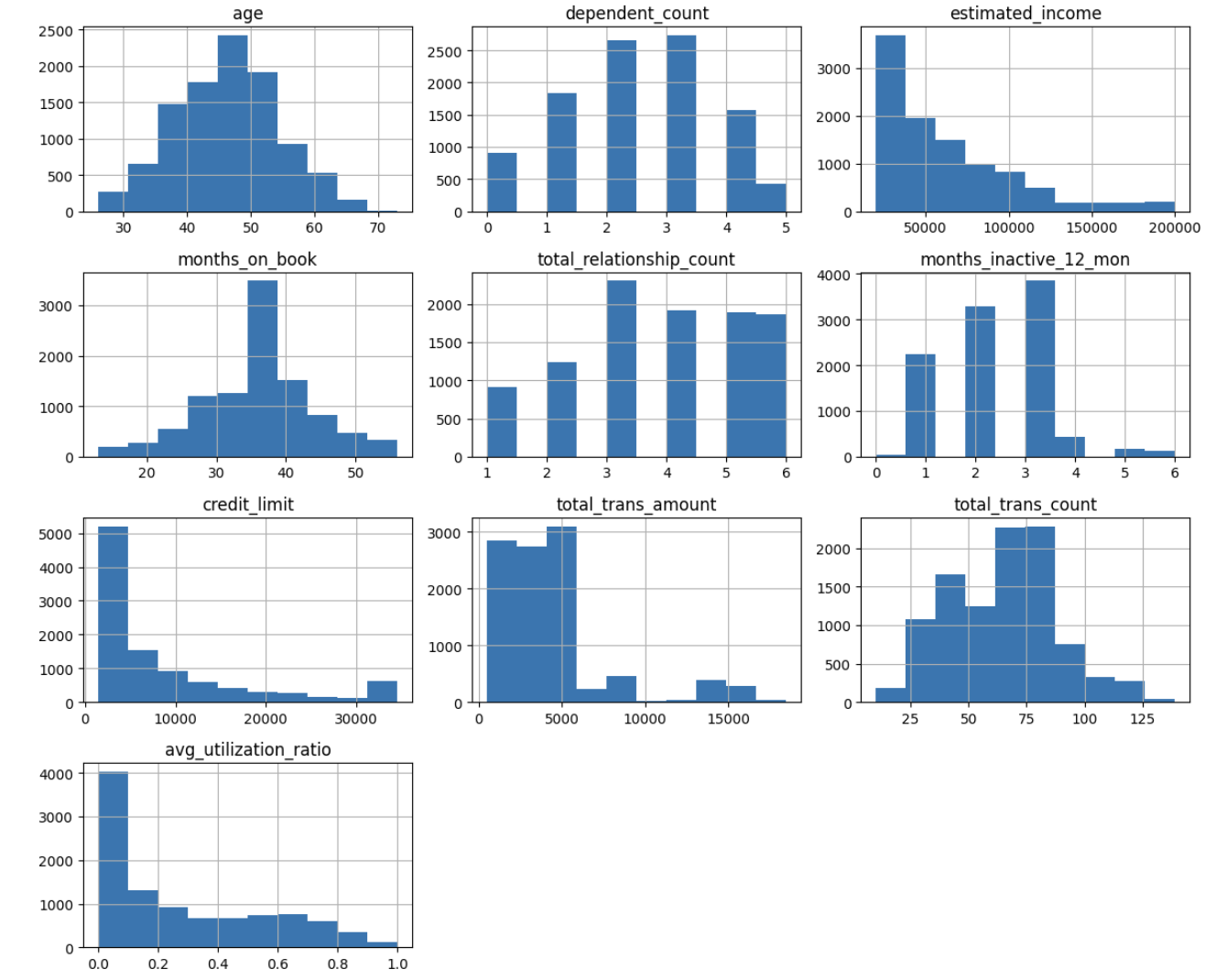

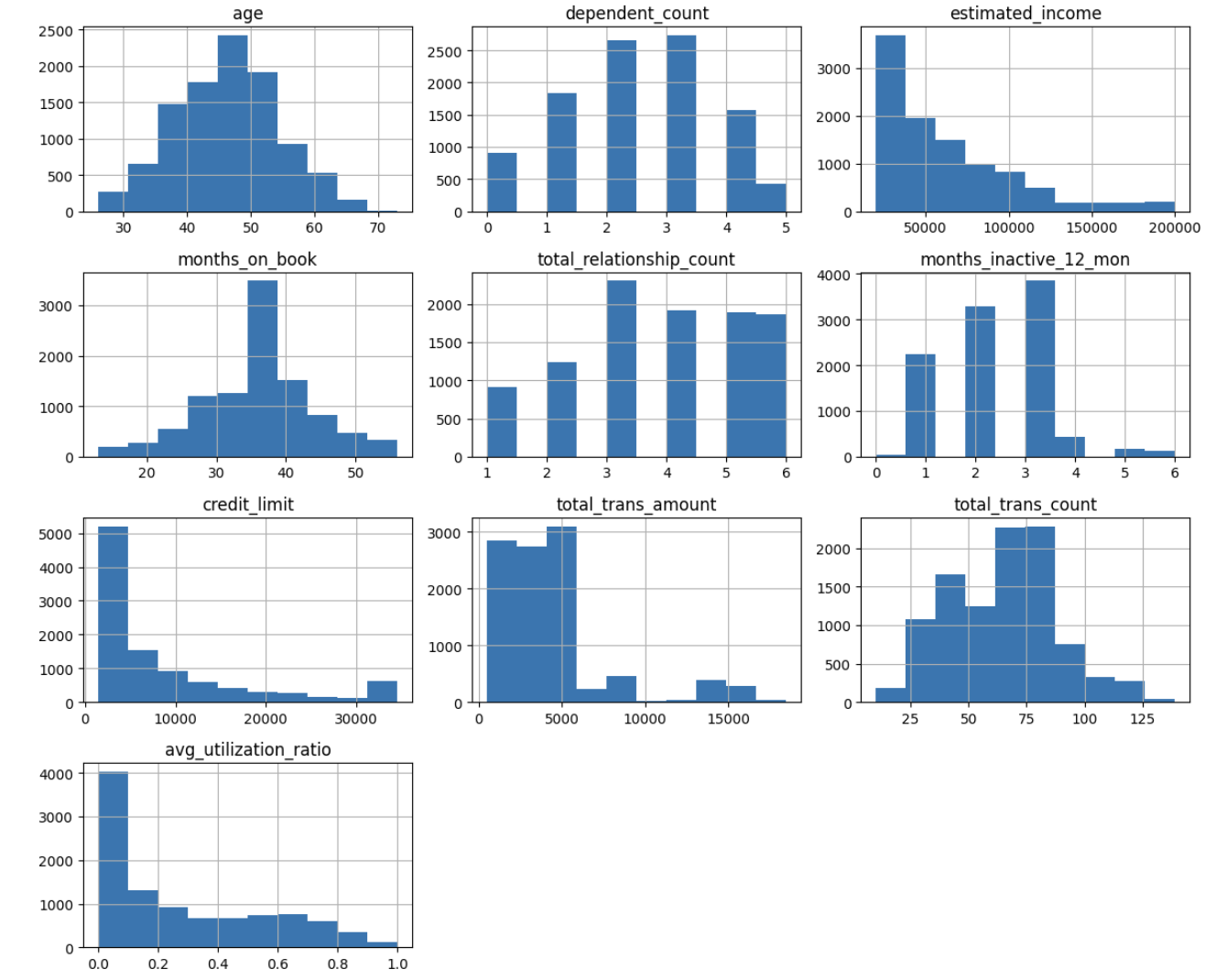

To make it easier to spot patterns, let’s visualize the distribution of each variable using histograms:

fig, ax = plt.subplots(figsize=(12, 10))

# Removing the customer's id before plotting the distributions

df.drop('customer_id', axis=1).hist(ax=ax)

plt.tight_layout()

plt.show()

Learning Insight: When working with Jupyter and matplotlib, you might see warning messages about multiple subplots. These are generally harmless and just inform you that matplotlib is handling some aspects of the plot creation automatically. For a portfolio project, you might want to refine your code to eliminate these warnings, but they don’t affect the functionality or accuracy of your analysis.

From these histograms, we can observe:

- Age: Fairly normally distributed, concentrated between 40-55 years

- Dependent Count: Most customers have 0-3 dependents

- Estimated Income: Right-skewed, with most customers having incomes below \$100,000

- Months on Book: Normally distributed, centered around 36 months

- Total Relationship Count: Most customers have 3-5 contacts with the company

- Credit Limit: Right-skewed, with most customers having a credit limit below \$10,000

- Transaction Metrics: Both amount and count show some right skew

- Utilization Ratio: Many customers have very low utilization (near 0), with a smaller group having high utilization
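Reading skew off a histogram is a judgment call, so it can help to put a number on it. The sketch below uses synthetic data (the column names and distribution parameters are assumptions chosen only to mimic a symmetric and a right-skewed variable) and pandas’ `skew()` method:

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)

# Synthetic stand-ins: one roughly symmetric column, one with a long right tail
toy = pd.DataFrame({
    "symmetric": rng.normal(loc=46, scale=8, size=5000),
    "right_skewed": rng.lognormal(mean=8.5, sigma=0.9, size=5000),
})

# Skewness near 0 suggests symmetry; clearly positive values indicate right skew
skewness = toy.skew()
print(skewness.round(2))
```

On the project data, `df.drop('customer_id', axis=1).skew(numeric_only=True)` would give one such number per numeric column.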

Next, let’s look at correlations between variables to understand relationships within our data and visualize them using a heatmap:

correlations = df.drop('customer_id', axis=1).corr(numeric_only=True)

fig, ax = plt.subplots(figsize=(12,8))

sns.heatmap(correlations[(correlations > 0.30) | (correlations < -0.30)],

cmap='Blues', annot=True, ax=ax)

plt.tight_layout()

plt.show()

Learning Insight: When creating correlation heatmaps, filtering to show only stronger correlations (e.g., those above 0.3 or below -0.3) can make the visualization much more readable and help you focus on the most important relationships in your data.
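To see what that filter does before it reaches the heatmap, here is a small sketch on made-up data (the `toy` frame and its columns are hypothetical). Indexing a DataFrame with a boolean mask keeps the values where the mask is true and replaces the rest with NaN, which seaborn then leaves blank:

```python
import pandas as pd

# Hypothetical data: b tracks a closely, c is unrelated to a
toy = pd.DataFrame({
    "a": [1, 2, 3, 4, 5],
    "b": [2, 4, 6, 8, 11],
    "c": [3, 1, 3, 1, 3],
})

corr = toy.corr()

# Keep only correlations stronger than 0.30 in either direction
strong = corr[(corr > 0.30) | (corr < -0.30)]
print(strong.round(2))
```

The weak pairs show up as NaN in `strong`, while the strong a/b relationship and the diagonal survive.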

The correlation heatmap reveals several interesting relationships:

- Age and Months on Book: Strong positive correlation (0.79), suggesting older customers have been with the company longer

- Credit Limit and Estimated Income: Positive correlation (0.52), which makes sense as higher income typically qualifies for higher credit limits

- Transaction Amount and Count: Strong positive correlation (0.81), meaning customers who make more transactions also spend more overall

- Credit Limit and Utilization Ratio: Negative correlation (-0.48), suggesting customers with higher credit limits tend to use a smaller percentage of their available credit

- Relationship Count and Transaction Amount: Negative correlation (-0.35), interestingly indicating that customers who contact the company more tend to spend less

These relationships will be valuable to consider as we interpret our clustering results later.

Feature Engineering

Before we can apply K-means clustering, we need to transform our categorical variables into numerical representations. K-means operates by calculating distances between points in a multi-dimensional space, so all features must be numeric.

Let’s handle each categorical variable appropriately:

1. Gender Transformation

Since gender is binary in this dataset (M/F), we can use a simple mapping:

customers_modif = df.copy()

customers_modif['gender'] = df['gender'].apply(lambda x: 1 if x == 'M' else 0)

customers_modif.head()

Learning Insight: When a categorical variable has only two categories, you can use a simple binary encoding (0/1) rather than one-hot encoding. This reduces the dimensionality of your data and can lead to more interpretable models.
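One caveat with the lambda approach is that any value other than 'M' becomes 0, including typos or unexpected labels. This dataset only contains M and F, so the result is the same, but an explicit mapping makes such problems visible. A minimal sketch on a made-up Series (the lowercase "m" is a deliberate, hypothetical data-entry error):

```python
import pandas as pd

gender = pd.Series(["M", "F", "F", "m", "M"])  # "m" is a deliberate typo

# The lambda silently treats anything that is not exactly "M" as 0
encoded_lambda = gender.apply(lambda x: 1 if x == "M" else 0)

# An explicit mapping leaves unexpected values as NaN so they can be caught
encoded_map = gender.map({"M": 1, "F": 0})

print(encoded_lambda.tolist())   # [1, 0, 0, 0, 1]
print(encoded_map.isna().sum())  # 1
```

Checking `isna().sum()` after a `map` is a cheap way to confirm that every category was covered.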

2. Education Level Transformation

Education level has a natural ordering (uneducated < high school < college, etc.), so we can use ordinal encoding:

education_mapping = {'Uneducated': 0, 'High School': 1, 'College': 2,

'Graduate': 3, 'Post-Graduate': 4, 'Doctorate': 5}

customers_modif['education_level'] = customers_modif['education_level'].map(education_mapping)

customers_modif.head()

3. Marital Status Transformation

Marital status doesn’t have a natural ordering, and it has more than two categories, so we’ll use one-hot encoding:

dummies = pd.get_dummies(customers_modif[['marital_status']])

customers_modif = pd.concat([customers_modif, dummies], axis=1)

customers_modif.drop(['marital_status'], axis=1, inplace=True)

print(customers_modif.info())

customers_modif.head()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 10127 entries, 0 to 10126

Data columns (total 17 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 customer_id 10127 non-null int64

1 age 10127 non-null int64

2 gender 10127 non-null int64

3 dependent_count 10127 non-null int64

4 education_level 10127 non-null int64

5 estimated_income 10127 non-null int64

6 months_on_book 10127 non-null int64

7 total_relationship_count 10127 non-null int64

8 months_inactive_12_mon 10127 non-null int64

9 credit_limit 10127 non-null float64

10 total_trans_amount 10127 non-null int64

11 total_trans_count 10127 non-null int64

12 avg_utilization_ratio 10127 non-null float64

13 marital_status_Divorced 10127 non-null bool

14 marital_status_Married 10127 non-null bool

15 marital_status_Single 10127 non-null bool

16 marital_status_Unknown 10127 non-null bool

dtypes: bool(4), float64(2), int64(11)

memory usage: 1.0 MB

Learning Insight: One-hot encoding creates a new binary column for each category, which can lead to an “implicit weighting” effect if a variable has many categories. This is something to be aware of when interpreting clustering results, as it can sometimes cause the algorithm to prioritize variables with more categories.
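The weighting effect can be made concrete. The sketch below is an illustration under stated assumptions, not part of the original analysis: it rebuilds only the gender and marital status columns from the counts printed earlier, standardizes them, and compares how much squared distance separates two otherwise identical customers.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Category counts copied from the value_counts() output earlier in the walkthrough
marital = np.repeat(["Married", "Single", "Unknown", "Divorced"],
                    [4687, 3943, 749, 748])
gender = np.repeat([0, 1], [5358, 4769])  # 0 = F, 1 = M

toy = pd.DataFrame({"gender": gender, "marital_status": marital})
encoded = pd.get_dummies(toy, columns=["marital_status"], dtype=int)

scaler = StandardScaler().fit(encoded)

# After scaling, flipping a 0/1 column shifts its value by 1 / std,
# so the squared distance contributed by that flip is (1 / std) ** 2
gap_sq = pd.Series((1 / scaler.scale_) ** 2, index=encoded.columns)

gender_flip = gap_sq["gender"]
married_to_single = gap_sq["marital_status_Married"] + gap_sq["marital_status_Single"]
married_to_divorced = gap_sq["marital_status_Married"] + gap_sq["marital_status_Divorced"]

print(round(gender_flip, 2), round(married_to_single, 2), round(married_to_divorced, 2))
```

Changing marital status always flips two dummy columns at once, and rare categories get a small standard deviation and therefore a large scaled gap. Both effects push customers with different marital statuses further apart than a difference in a single binary feature would.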

Now our data is fully numeric and ready for scaling and clustering.

Scaling the Data

K-means clustering uses distance-based calculations, so it’s important to scale our features to ensure that variables with larger ranges (like income) don’t dominate the clustering process over variables with smaller ranges (like age).

X = customers_modif.drop('customer_id', axis=1)

scaler = StandardScaler()

scaler.fit(X)

X_scaled = scaler.transform(X)

Learning Insight: StandardScaler transforms each feature to have a mean of 0 and a standard deviation of 1. This puts all features on an equal footing, regardless of their original scales. For K-means clustering, this is what ensures that each feature contributes equally to the distance calculations.
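As a quick sanity check of what the scaler does, the sketch below standardizes the age and estimated income values from the five rows shown by `df.head()` and compares the result with the formula applied by hand (the `X_toy` name is just illustrative):

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# age and estimated_income from the first five rows shown earlier
X_toy = np.array([
    [45, 69000.0],
    [49, 24000.0],
    [51, 93000.0],
    [40, 37000.0],
    [40, 65000.0],
])

# fit_transform combines the fit and transform steps used above
X_std = StandardScaler().fit_transform(X_toy)

# Same result by hand: subtract the column mean, divide by the population std
manual = (X_toy - X_toy.mean(axis=0)) / X_toy.std(axis=0)

print(np.allclose(X_std, manual))  # True
print(X_std.mean(axis=0).round(6), X_std.std(axis=0).round(6))
```

After scaling, a difference of a few years in age and a difference of tens of thousands of dollars in income are expressed in the same units: standard deviations from the column mean.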

Finding the Optimal Number of Clusters

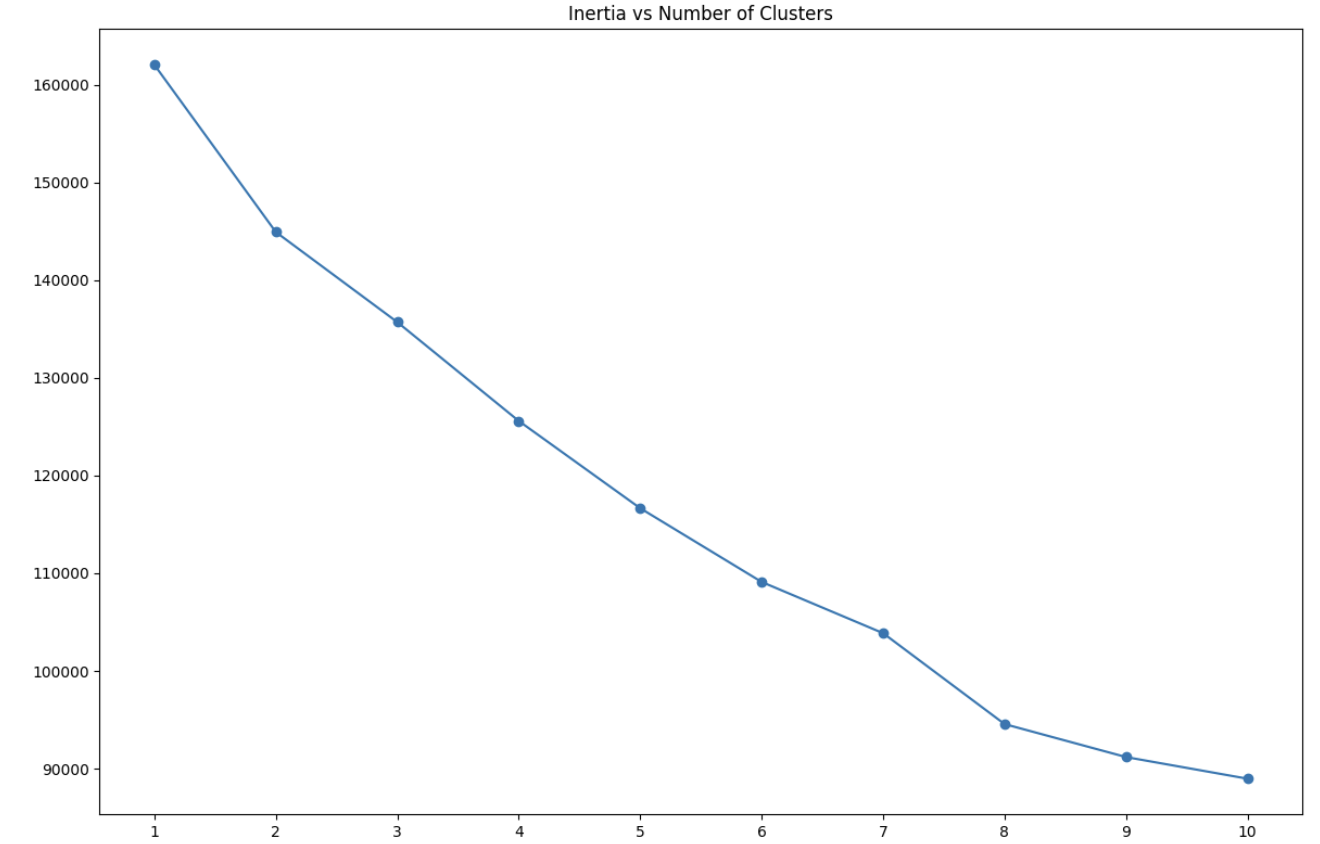

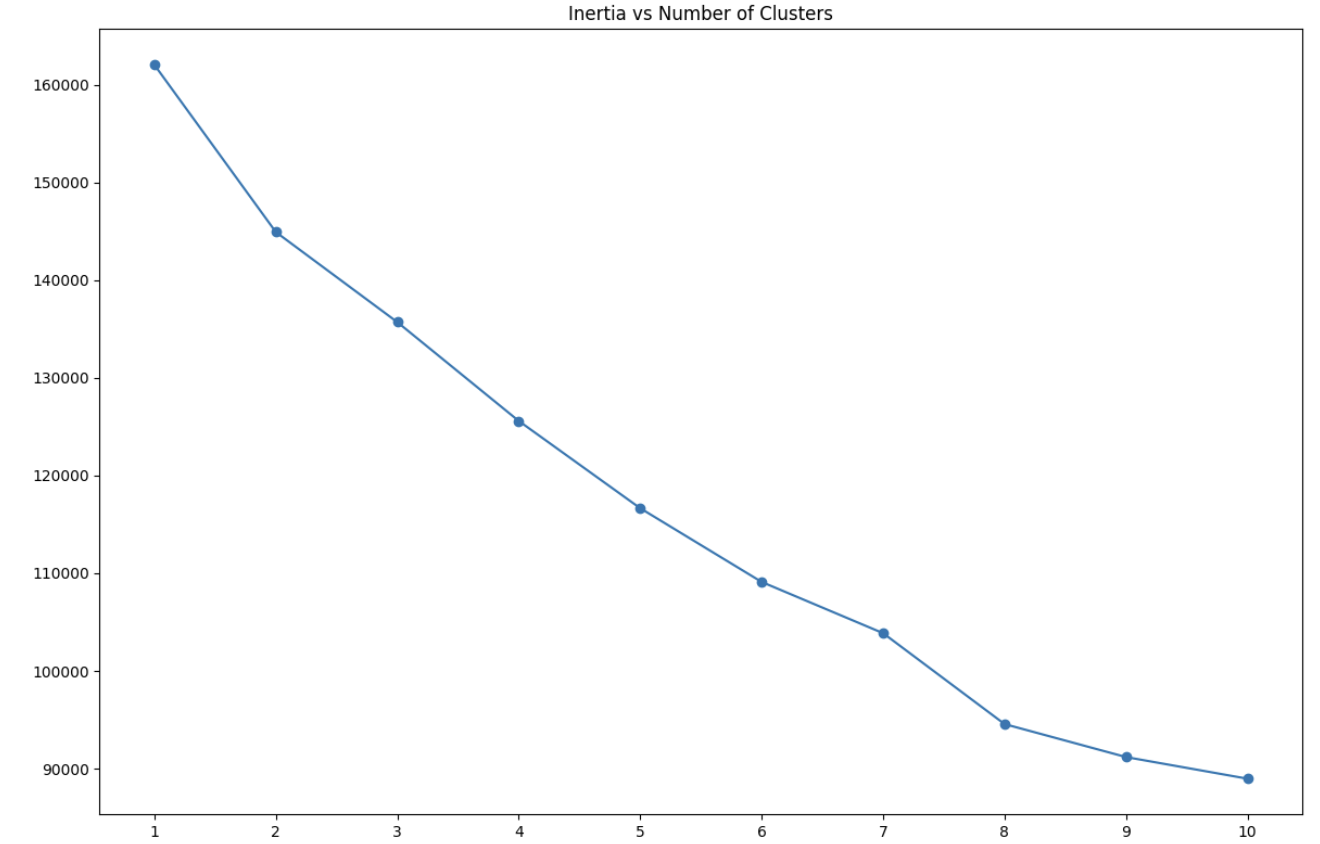

One of the challenges with K-means clustering is determining the optimal number of clusters. The elbow method is a common approach, where we plot the sum of squared distances (inertia) for different numbers of clusters and look for an “elbow” point where the rate of decrease sharply changes.

X = pd.DataFrame(X_scaled)

inertias = []

for k in range(1, 11):
    model = KMeans(n_clusters=k)
    y = model.fit_predict(X)
    inertias.append(model.inertia_)

plt.figure(figsize=(12, 8))

plt.plot(range(1, 11), inertias, marker='o')

plt.xticks(ticks=range(1, 11), labels=range(1, 11))

plt.title('Inertia vs Number of Clusters')

plt.tight_layout()

plt.show()

Learning Insight: The elbow method isn’t always crystal clear, and there’s often some judgment involved in selecting the “right” number of clusters. Consider running the clustering multiple times with different numbers of clusters and evaluating which solution provides the most actionable insights for your business context.

In our case, the plot suggests that around 5-8 clusters could be appropriate, as the decrease in inertia begins to level off in this range. For this analysis, we’ll choose 8 clusters, as it appears to strike a good balance between detail and interpretability.
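To make the inertia values behind the elbow plot less abstract, here is a minimal sketch on synthetic data. Everything in it is an assumption for illustration: the three blob centers, the sample size, and the choice of `n_init=10` and `random_state=0`, which are set only so the run is repeatable. It recomputes inertia by hand and shows the large drop up to the true number of groups followed by much smaller gains.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic data with three well-separated groups
X_toy, _ = make_blobs(n_samples=300, centers=[[0, 0], [10, 10], [-10, 10]],
                      random_state=0)

inertias = []
for k in range(1, 7):
    model = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X_toy)
    inertias.append(model.inertia_)

# Inertia by hand for k=3: squared distance of each point to its assigned centroid
model3 = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X_toy)
assigned_centers = model3.cluster_centers_[model3.labels_]
manual_inertia = ((X_toy - assigned_centers) ** 2).sum()

print(np.isclose(manual_inertia, model3.inertia_))
print([round(value) for value in inertias])
```

Real customer data rarely produces an elbow this sharp, which is why the choice between 5 and 8 clusters above remains a judgment call.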

Building the K-Means Clustering Model

Now that we’ve determined the optimal number of clusters, let’s build our K-means model:

model = KMeans(n_clusters=8)

y = model.fit_predict(X_scaled)

# Adding the cluster assignments to our original dataframe

df['CLUSTER'] = y + 1 # Adding 1 to make clusters 1-based instead of 0-based

df.head()

Let’s check how many customers we have in each cluster:

df['CLUSTER'].value_counts()

CLUSTER

5 2015

7 1910

2 1577

1 1320

4 1045

6 794

3 736

8 730

Name: count, dtype: int64

Our clusters have reasonably balanced sizes, with no single cluster dominating the others.
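Keep in mind that K-means starts from random centroids, so the cluster numbers (and sometimes the sizes) you get may differ from the ones shown here. If you want repeatable results, one option is to fix the seed. The sketch below uses synthetic blobs, and the seed value 42 and `n_init=10` are arbitrary choices for illustration rather than settings from the original analysis:

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic data with three well-separated groups
X_toy, _ = make_blobs(n_samples=200, centers=[[0, 0], [8, 8], [-8, 8]],
                      random_state=1)

# Two separate models with the same seed
labels_a = KMeans(n_clusters=3, n_init=10, random_state=42).fit_predict(X_toy)
labels_b = KMeans(n_clusters=3, n_init=10, random_state=42).fit_predict(X_toy)

print(np.array_equal(labels_a, labels_b))  # True: same seed, same labels
print(np.bincount(labels_a))               # cluster sizes
```

Applied to this project, that would mean passing `random_state` to the `KMeans` calls above, so that a reference such as "Cluster 6" points to the same group every time the notebook is rerun.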

Analyzing the Clusters

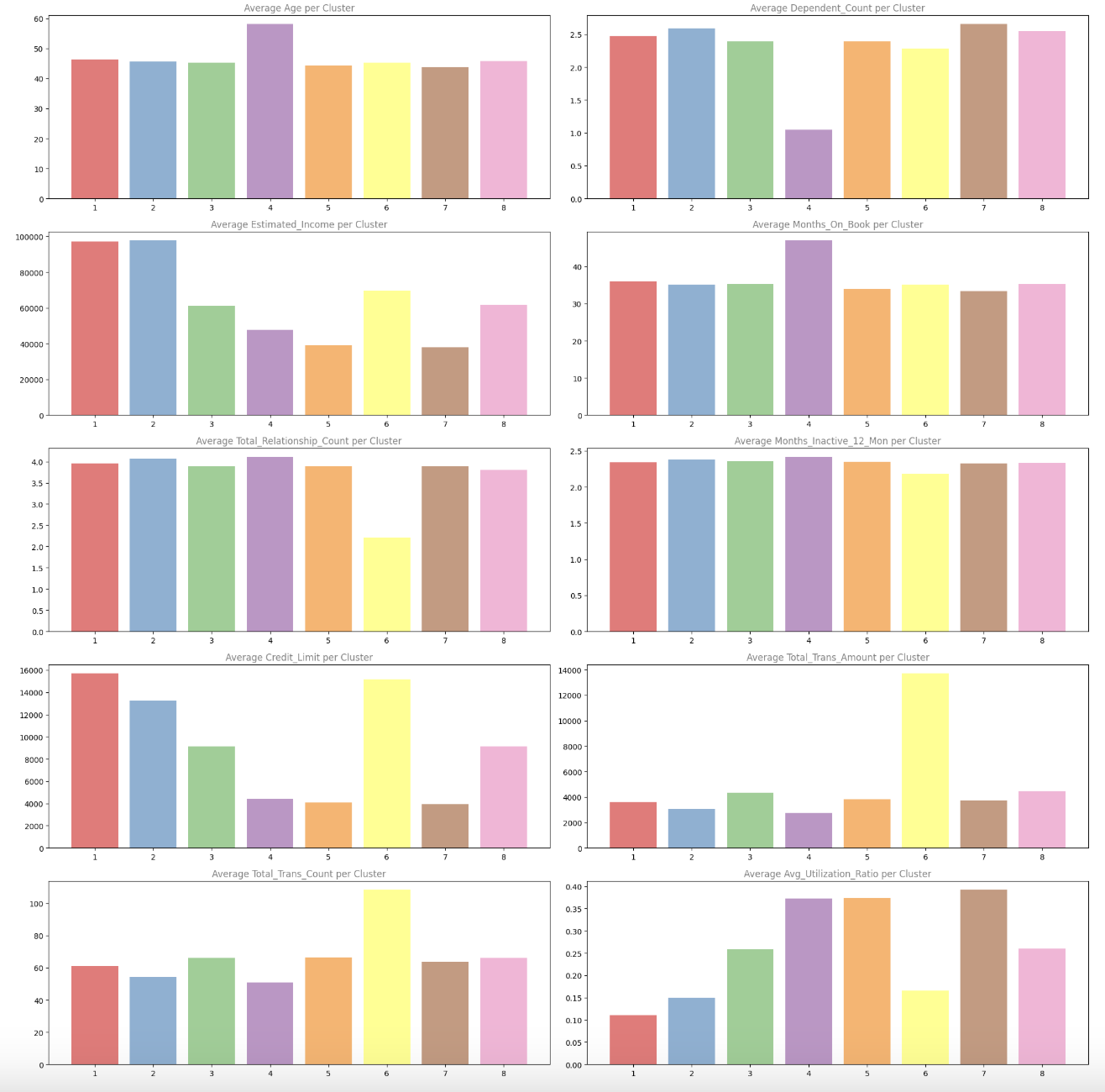

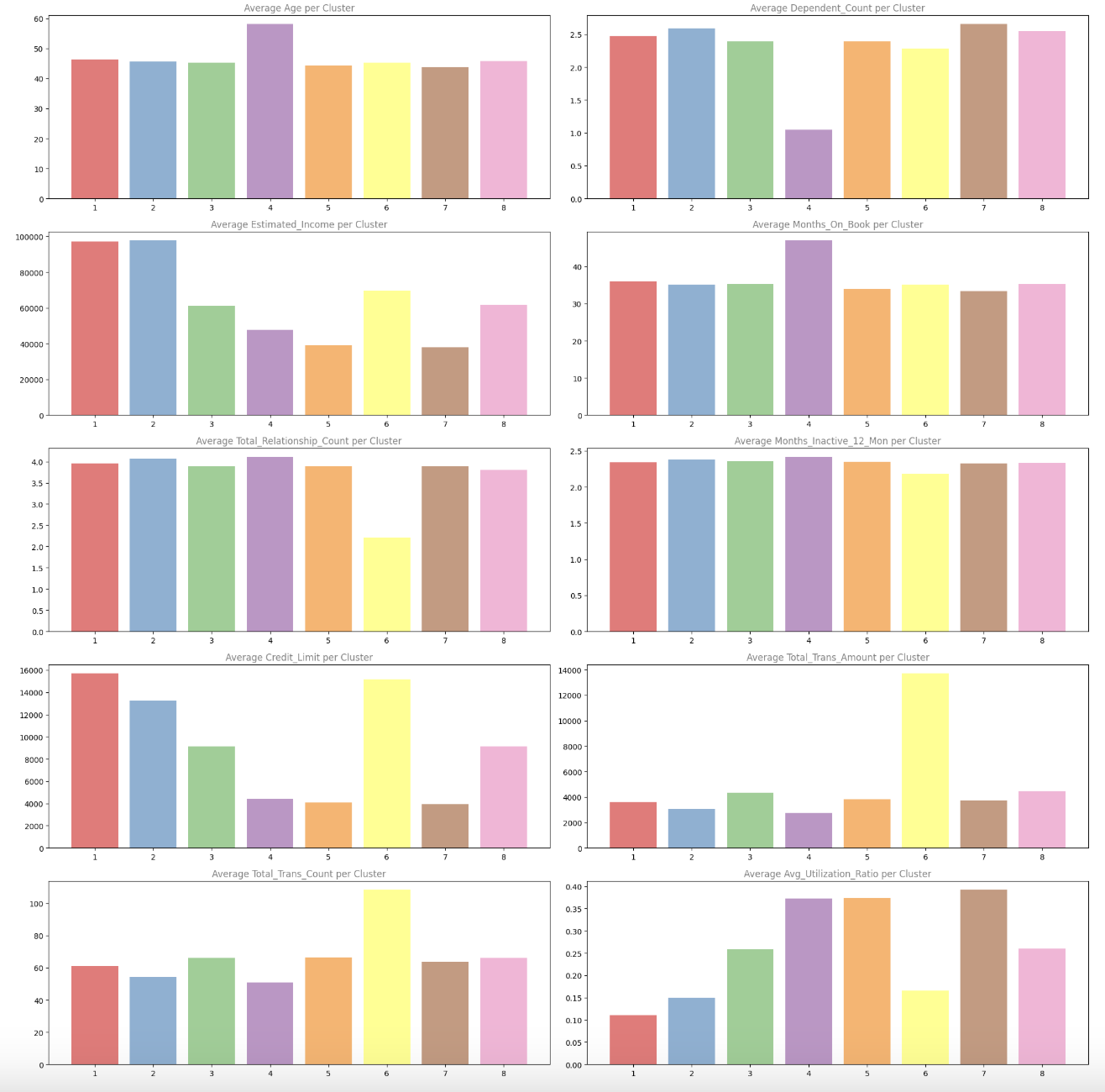

Now that we’ve created our customer segments, let’s analyze them to understand what makes each cluster unique. We’ll start by examining the average values of numeric variables for each cluster:

numeric_columns = df.select_dtypes(include=np.number).drop(['customer_id', 'CLUSTER'], axis=1).columns

fig = plt.figure(figsize=(20, 20))

for i, column in enumerate(numeric_columns):
    # Average value of this variable within each cluster
    df_plot = df.groupby('CLUSTER')[column].mean()
    ax = fig.add_subplot(5, 2, i+1)
    ax.bar(df_plot.index, df_plot, color=sns.color_palette('Set1'), alpha=0.6)
    ax.set_title(f'Average {column.title()} per Cluster', alpha=0.5)
    ax.xaxis.grid(False)

plt.tight_layout()

plt.show()

These bar charts help us understand how each variable differs across clusters. For example, we can see:

- Estimated Income: Clusters 1 and 2 have significantly higher average incomes

- Credit Limit: Similarly, Clusters 1 and 2 have higher credit limits

- Transaction Metrics: Cluster 6 stands out with much higher transaction amounts and counts
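The same comparison can be read as a table instead of a chart. The sketch below uses a tiny made-up frame (the values are hypothetical and only mimic the pattern described above) to compute per-cluster means and express them relative to the overall average:

```python
import pandas as pd

# Hypothetical customers already labelled with a cluster
toy = pd.DataFrame({
    "CLUSTER": [1, 1, 2, 2, 3, 3],
    "estimated_income": [95000, 105000, 40000, 44000, 68000, 72000],
    "total_trans_count": [40, 50, 60, 64, 110, 120],
})

# Mean of every variable within each cluster
cluster_means = toy.groupby("CLUSTER").mean()

# Ratio to the overall mean: values above 1 are above average
relative = (cluster_means / toy.drop(columns="CLUSTER").mean()).round(2)

print(cluster_means)
print(relative)
```

A ratio table like `relative` makes it easier to spot which clusters stand out on which variables, regardless of the units involved.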

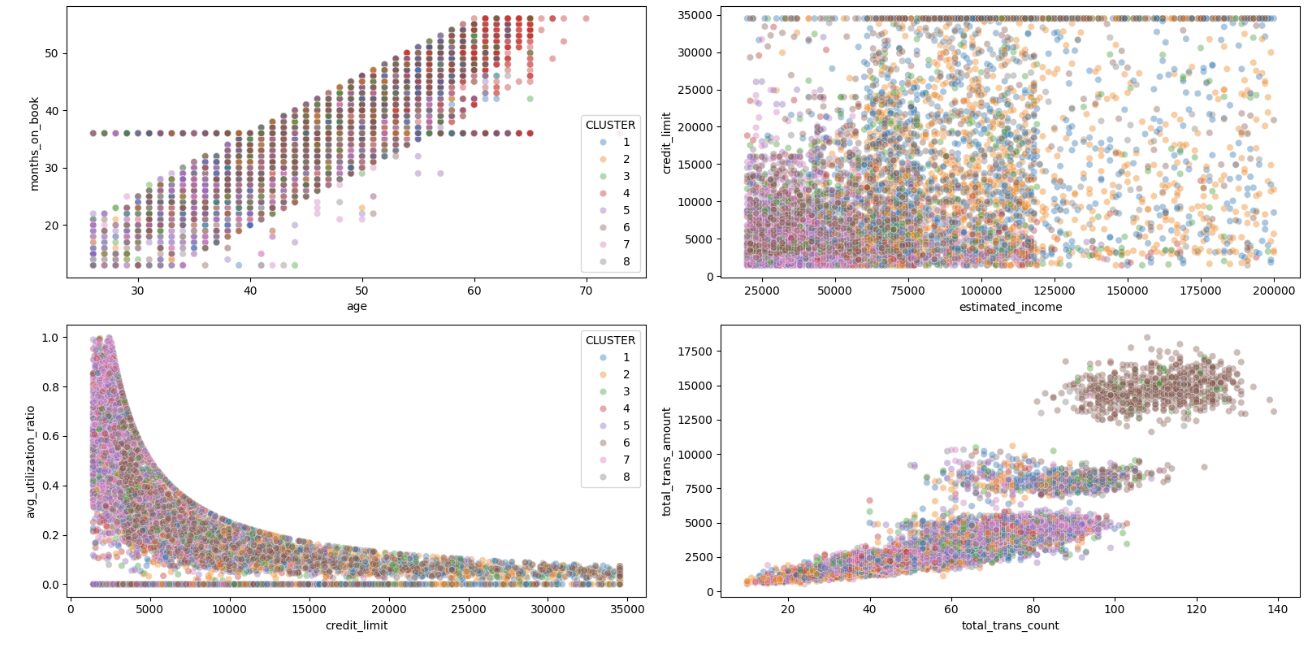

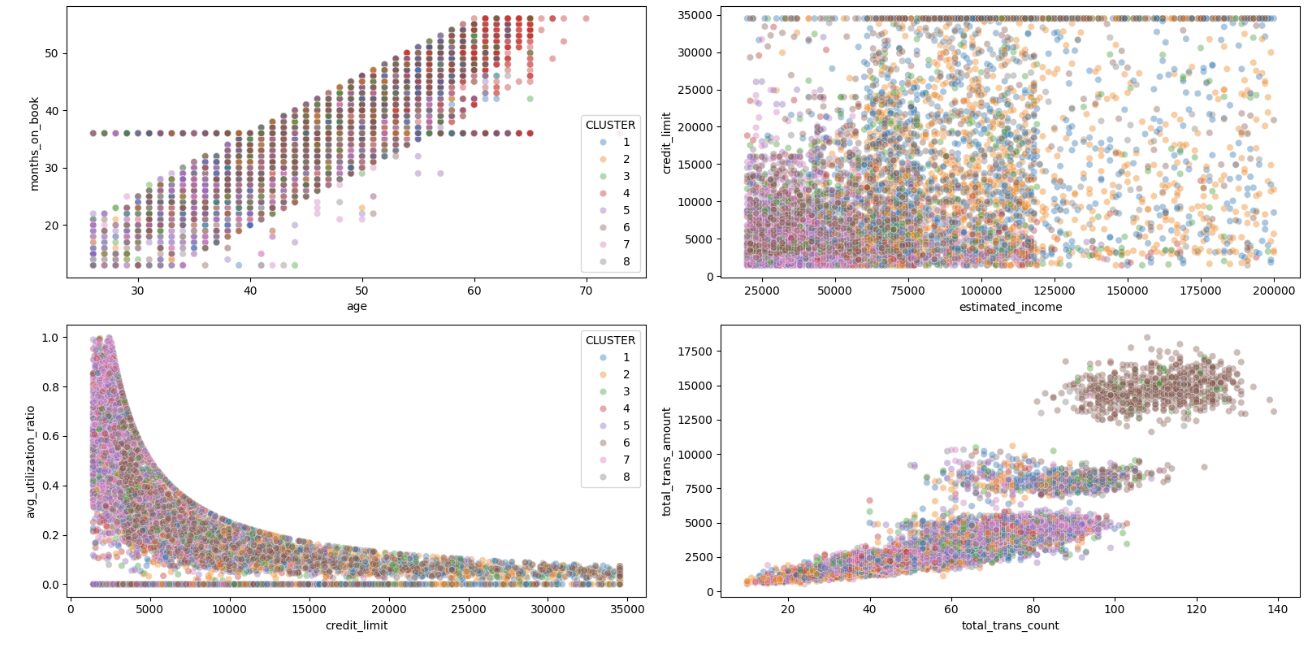

Let’s also look at how the clusters appear in scatter plots of key variables:

fig, ((ax1, ax2), (ax3, ax4)) = plt.subplots(2, 2, figsize=(16, 8))

sns.scatterplot(x='age', y='months_on_book', hue='CLUSTER', data=df, palette='tab10', alpha=0.4, ax=ax1)

sns.scatterplot(x='estimated_income', y='credit_limit', hue='CLUSTER', data=df, palette='tab10', alpha=0.4, ax=ax2, legend=False)

sns.scatterplot(x='credit_limit', y='avg_utilization_ratio', hue='CLUSTER', data=df, palette='tab10', alpha=0.4, ax=ax3)

sns.scatterplot(x='total_trans_count', y='total_trans_amount', hue='CLUSTER', data=df, palette='tab10', alpha=0.4, ax=ax4, legend=False)

plt.tight_layout()

plt.show()

The scatter plots reveal some interesting patterns:

- In the Credit Limit vs. Utilization Ratio plot, we can see distinct clusters with different behaviors – some have high credit limits but low utilization, while others have lower limits but higher utilization

- The Transaction Count vs. Amount plot shows Cluster 6 as a distinct group with high transaction activity

- The Age vs. Months on Book plot shows the expected positive correlation, but with some interesting cluster separations

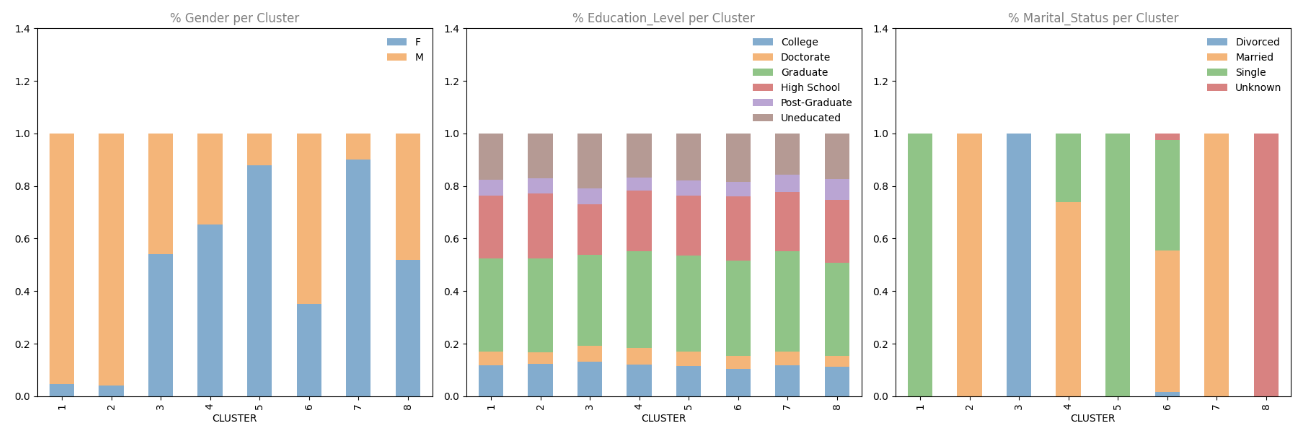

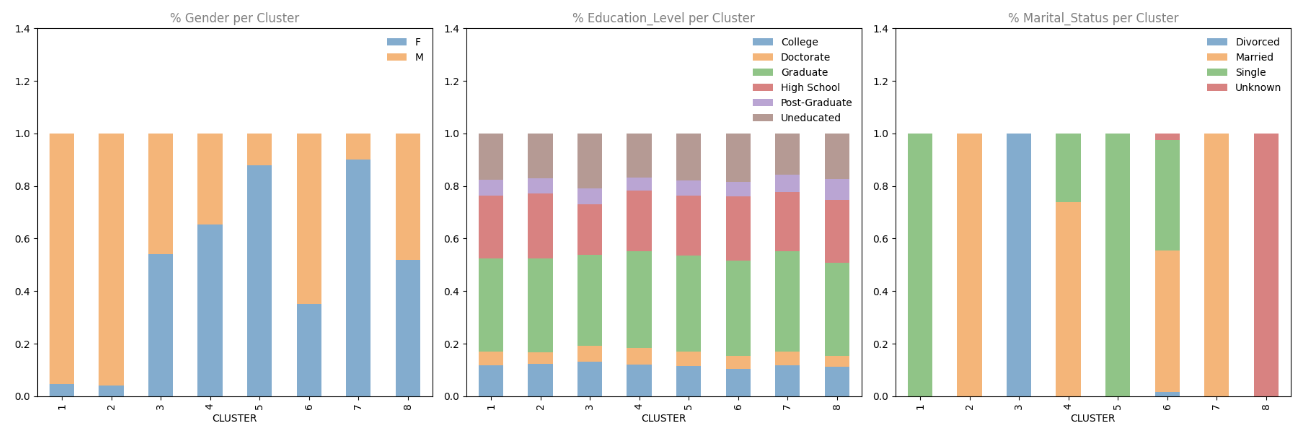

Finally, let’s examine the distribution of categorical variables across clusters:

cat_columns = df.select_dtypes(include=['object'])

fig = plt.figure(figsize=(18, 6))

for i, col in enumerate(cat_columns):
    # Share of each category within every cluster (rows sum to 1)
    plot_df = pd.crosstab(index=df['CLUSTER'], columns=df[col], values=df[col], aggfunc='size', normalize='index')
    ax = fig.add_subplot(1, 3, i+1)
    plot_df.plot.bar(stacked=True, ax=ax, alpha=0.6)
    ax.set_title(f'% {col.title()} per Cluster', alpha=0.5)
    # Extra headroom above 1.0 leaves space for the legend
    ax.set_ylim(0, 1.4)
    ax.legend(frameon=False)
    ax.xaxis.grid(False)

plt.tight_layout()

plt.show()

These stacked bar charts reveal some strong patterns:

- Gender: Some clusters are heavily skewed towards one gender (e.g., Clusters 5 and 7 are predominantly female)

- Marital Status: Certain clusters are strongly associated with specific marital statuses (e.g., Cluster 2 is mostly married, Cluster 5 is mostly single)

- Education Level: This shows more mixed patterns across clusters
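The numbers behind the stacked bars come from a row-normalized cross-tabulation. A small sketch on made-up labels (the `toy` frame is hypothetical) shows what `normalize='index'` produces; by default `pd.crosstab` counts rows, so the shorter call below is enough for this illustration:

```python
import pandas as pd

# Hypothetical cluster labels and genders
toy = pd.DataFrame({
    "CLUSTER": [1, 1, 1, 1, 2, 2, 2, 2],
    "gender":  ["M", "M", "M", "F", "F", "F", "F", "M"],
})

# normalize="index" divides each row by its total, giving within-cluster shares
shares = pd.crosstab(index=toy["CLUSTER"], columns=toy["gender"], normalize="index")
print(shares)
```

Each row sums to 1, so a value of 0.75 in the M column means three quarters of that cluster is male.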

Learning Insight: The strong influence of marital status on our clustering results might be partly due to the one-hot encoding we used, which created four separate columns for this variable. In future iterations, you might want to experiment with different encoding methods or scaling to see how it affects your results.

Customer Segment Profiles

Based on our analysis, we can create profiles for each customer segment, summarized in the table below:

| Segment | Demographics | Financial Profile | Behavior | Marketing Opportunity |
|---|---|---|---|---|
| High-Income Single Males | • Predominantly male • Mostly single | • High income (~\$100K) • High credit limit | • Low credit card utilization (10%) | These customers have money to spend but aren’t using their cards much. The company could offer rewards or incentives specifically tailored to single professionals to encourage more card usage. |
| Affluent Family Men | • Predominantly male • Married • Higher number of dependents (~2.5) | • High income (~\$100K) • High credit limit | • Low utilization ratio (15%) | These customers represent family-oriented high earners. Family-focused rewards programs or partnerships with family-friendly retailers could increase their card usage. |
| Divorced Mid-Income Customers | • Mixed gender • Predominantly divorced | • Average income and credit limit | • Average transaction patterns | This segment might respond well to financial planning services or stability-focused messaging as they navigate post-divorce finances. |
| Older Loyal Customers | • 60% female • 70% married • Oldest average age (~60) | • Lower credit limit • Higher utilization ratio | • Longest relationship with the company • Few dependents | These loyal customers might appreciate recognition programs and senior-focused benefits. |
| Young Single Women | • 90% female • Predominantly single | • Lowest average income (~\$40K) • Low credit limit | • High utilization ratio | This segment might benefit from entry-level financial education and responsible credit usage programs. They might also be receptive to credit limit increase offers as their careers progress. |
| Big Spenders | • 60% male • Mix of single and married | • Above-average income (~\$70K) • High credit limit | • Highest transaction count and amount by a large margin | These are the company’s most active customers. Premium rewards programs and exclusive perks could help maintain their high engagement. |
| Family-Focused Women | • 90% female • Married • Highest number of dependents | • Low income (~\$40K) • Low credit limit paired with high utilization | • Moderate transaction patterns | This segment might respond well to family-oriented promotions and cash-back on everyday purchases like groceries and children’s items. |
| Unknown Marital Status | • Mixed gender • All with unknown marital status | • Average across most metrics | • No distinct patterns | This segment primarily exists due to missing data. The company should attempt to update these records to better categorize these customers. |

Challenges and Considerations

Our analysis revealed some interesting patterns, but also highlighted a potential issue with our approach. The strong influence of marital status on our clustering results suggests that our one-hot encoding of this variable might have given it more weight than intended. This “implicit weighting” effect is a common challenge when using one-hot encoding with K-means clustering.

For future iterations, we might consider:

- Alternative Encoding Methods: Try different approaches for categorical variables

- Remove Specific Categories: Test if removing the “Unknown” marital status changes the clustering patterns

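- Different Distance Metrics: Experiment with alternative distance calculations. Standard K-means is built around Euclidean distance, so this usually means switching to a related algorithm such as k-medoids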

- Feature Selection: Explicitly choose which features to include in the clustering

Summary of Analysis

In this project, we’ve demonstrated how unsupervised machine learning can be used to segment customers based on their demographic and behavioral characteristics. These segments provide valuable insights that a credit card company can use to develop targeted marketing strategies and improve customer engagement.

Our analysis identified eight distinct customer segments, each with their own characteristics and opportunities for targeted marketing:

- High-income single males

- Affluent family men

- Divorced mid-income customers

- Older loyal customers

- Young single women

- Big spenders

- Family-focused women

- Unknown marital status (potential data issue)

These groupings can help the company tailor their marketing messages, rewards programs, and product offerings to the specific needs and behaviors of each customer segment, potentially leading to increased card usage, customer satisfaction, and revenue.

Next Steps

To take this analysis further, you might try your hand at these enhancements:

- Validate the Clusters: Use silhouette scores or other metrics to quantitatively evaluate the quality of the clusters

- Experiment with Different Algorithms: Try hierarchical clustering or DBSCAN as alternatives to K-means

- Include Additional Data: Incorporate more customer variables, such as spending categories or payment behaviors

- Temporal Analysis: Analyze how customers move between segments over time
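As a starting point for the first suggestion, here is a minimal sketch of silhouette scoring on synthetic data. The four blob centers, the range of k values, and the `n_init` and `random_state` settings are all assumptions for illustration. The silhouette score ranges from -1 to 1, with higher values indicating tighter, better-separated clusters.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic data with four well-separated groups
X_toy, _ = make_blobs(
    n_samples=300,
    centers=[[0, 0], [10, 10], [-10, 10], [0, 20]],
    random_state=0,
)

# Score each candidate number of clusters (silhouette needs at least 2)
scores = {}
for k in range(2, 8):
    labels = KMeans(n_clusters=k, n_init=10, random_state=0).fit_predict(X_toy)
    scores[k] = silhouette_score(X_toy, labels)

best_k = max(scores, key=scores.get)
print(best_k, {k: round(score, 2) for k, score in scores.items()})
```

For the customer data, the same loop could be run on `X_scaled` and compared with the elbow plot. Expect lower and less clear-cut scores than on this toy example, since real segments overlap.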

If you’re new to Python and do not feel ready to start this project, begin with our Python Basics for Data Analysis skill path to build the foundational skills needed for this project. The course covers essential topics like loops, conditionals, and data manipulation with pandas that we’ve used extensively in this analysis. Once you’re comfortable with these concepts, come back to build your own customer segmentation model and take on the enhancement challenges!

Happy coding!
